Artificial intelligence (AI) has the potential to revolutionize the way we approach medical diagnosis, treatment, and patient care. From machine learning algorithms that analyze medical images with high accuracy to tools that streamline clinical workflows and reduce administrative burden, AI is already making a significant impact in healthcare. Recent developments in large language models (LLMs) have also introduced applications that reach into the exam room and have started new conversations about how the technology changes clinicians' roles, or doesn't.

AI will change the way we diagnose patients. It will change the way we treat patients. It will change the way we make new discoveries. It will become more ubiquitous than the stethoscope. And, as with any new technology in medicine, particularly one as fundamental as this, it's worth understanding how it works before it ends up in front of us and our patients. So in this post, we'll define the fundamental tenets of AI and look at what the future might hold for this exciting field.

Artificial Intelligence

"Artificial intelligence is getting computers to do things that traditionally require human intelligence..."

- Pedro Domingos, Professor of Computer Science and Engineering, University of Washington

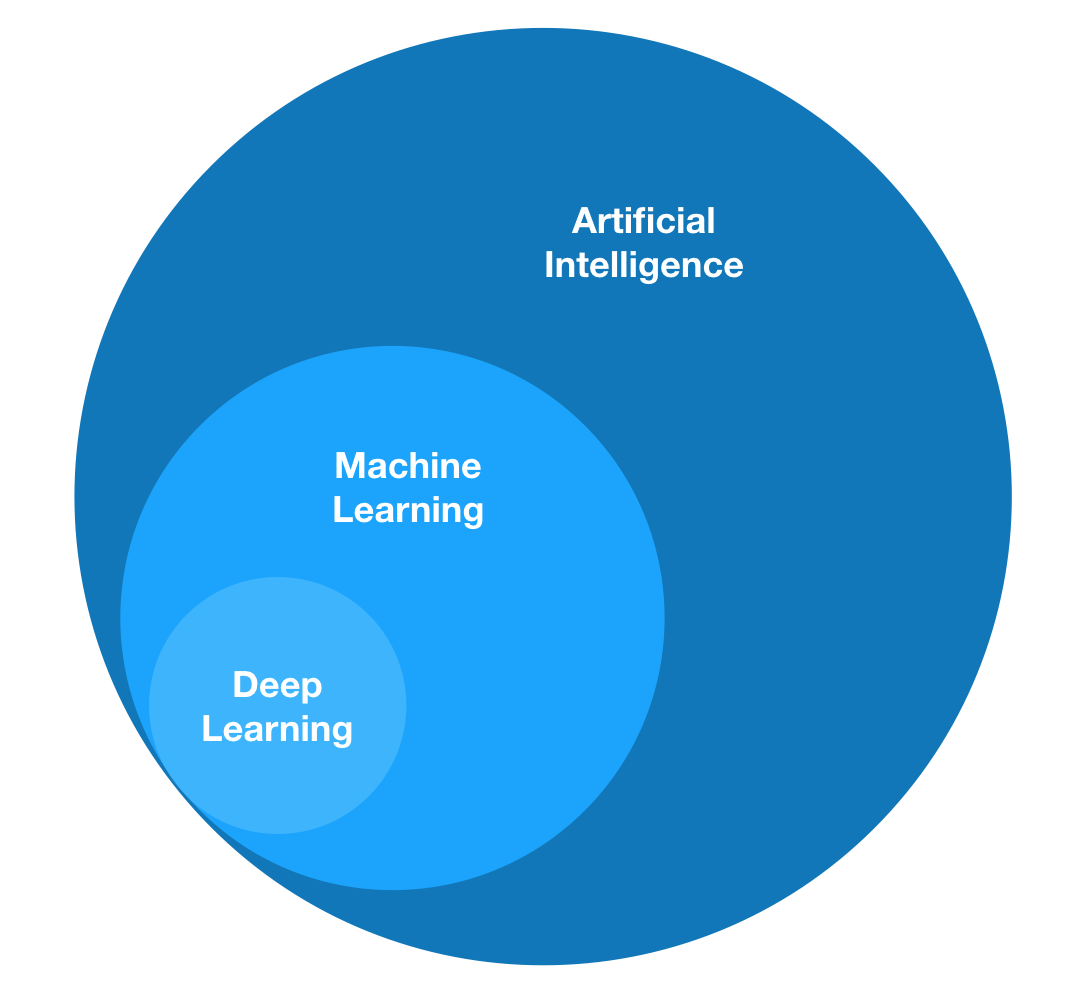

Artificial intelligence is a multidisciplinary field within computer science concerned with understanding and building intelligent entities, often in the form of software programs. Its foundations include mathematics, logic, philosophy, probability, statistics, linguistics, neuroscience, and decision theory, and many subfields fall under its umbrella, such as computer vision, robotics, machine learning, and natural language processing. Taken together, the goal of this constellation of fields and technologies is to get computers to do what previously required human intelligence.

The concept of AI has been in the heads of dreamers, researchers, and computer scientists for at least 70 years. Computer scientist John McCarthy coined the term, and the field is usually dated to the summer of 1956, when he brought together a group of pioneering minds at Dartmouth College to discuss the development of machines that possessed characteristics of human intelligence. Over the decades, the field has gone through periods of optimism and pessimism, booms and busts, but in the last 10 years we seem to have reached something of an inflection point in our ability to realize AI.

Machine Learning

"[Machine learning is a] field of study that gives computers the ability to learn without being explicitly programmed."

- Arthur Samuel, pioneer in artificial intelligence, coined the term ‘machine learning’ in 1959

Machine learning is a subfield of artificial intelligence whose goal is to enable computers to learn on their own. It has been one of the most successful approaches to AI, so successful that it deserves much of the credit for the resurgence of its parent field in recent years. At its most basic, machine learning is the practice of using algorithms to identify patterns in data, building mathematical models based on those patterns, and then using those models to make a determination or prediction about something in the world. This departs from conventional programming, in which the way to get a computer to do anything was for humans to spell it out, line by line, in human-authored computer code.

Machine learning is different. Rather than following step-by-step instructions written by a human, the computer uses a learning algorithm to derive its own rules from examples. Think of this process as the embodiment of the old adage that "There is no better teacher than experience," except that in machine learning the teacher isn't human; the teacher is data, and lots of it. So when we entered the age of big data, digitized data suddenly became plentiful. This flood of data, combined with exponential increases in computing power, was the one-two punch that made machine learning the first AI approach to truly blossom.
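
To make the contrast between hand-written rules and learned rules concrete, here is a minimal Python sketch. It is a toy, not a clinical tool: the measurement values, the labels, and the hand-picked cutoff of 5.0 are made-up assumptions, and the "learning" is the simplest possible kind, a one-feature decision stump that picks whichever threshold makes the fewest mistakes on the labeled examples.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical toy data: (measurement, has_condition). Values are made up.
Example = Tuple[float, bool]


def hand_written_rule(measurement: float) -> bool:
    # Traditional programming: a human decides the cutoff (5.0 is arbitrary here).
    return measurement >= 5.0


def learn_threshold(examples: List[Example]) -> float:
    """Learn a cutoff from labeled examples (a one-feature decision stump).

    Every observed value is tried as a candidate threshold; the one that
    misclassifies the fewest training examples wins (ties keep the first).
    """
    best_threshold: Optional[float] = None
    best_errors: Optional[int] = None
    for candidate, _ in examples:
        errors = sum((value >= candidate) != label for value, label in examples)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = candidate, errors
    if best_threshold is None:
        raise ValueError("need at least one example")
    return best_threshold


def make_classifier(threshold: float) -> Callable[[float], bool]:
    # The learned "model" is just the threshold the data picked.
    return lambda measurement: measurement >= threshold


training_data: List[Example] = [
    (1.0, False), (2.0, False), (3.0, False),
    (4.0, True), (5.0, True), (6.0, True),
]
learned = learn_threshold(training_data)  # 4.0 for this toy data
classify = make_classifier(learned)
print(learned, classify(4.5), hand_written_rule(4.5))  # 4.0 True False
```

The difference lies in where the cutoff comes from: in `hand_written_rule` a person chose it, while in `learn_threshold` the data did. Real machine learning models learn far more than one parameter, but the principle is the same.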

Deep Learning and Neural Networks

"AI [deep learning] is akin to building a rocket ship. You need a huge engine and a lot of fuel. The rocket engine is the learning algorithm but the fuel is the huge amounts of data we can feed to these algorithms."

- Andrew Ng, global leader in AI

Deep learning is a subset of machine learning: a technique for implementing it. Like the rest of machine learning, deep learning uses algorithms to find useful patterns in data, but it is distinguished from the broader family of machine learning methods by its use of artificial neural networks (ANNs), an architecture originally inspired by a simplified model of the neurons in a brain.

Despite the name, artificial neural networks don't really work like the neurons in our brain. Think of an ANN as a very complex math formula made up of a large number of more basic math formulas that "connect" and "send signals" to one another in ways reminiscent of the synapses and action potentials of biological neurons.
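
The "formula made of smaller formulas" idea can be sketched in a few lines of Python. This is an illustrative assumption rather than any particular library's implementation: each artificial neuron computes a weighted sum of its inputs (the weights loosely standing in for synapse strengths), adds a bias, and passes the result through a sigmoid function whose output between 0 and 1 is loosely analogous to how strongly the neuron "fires." A layer is simply several neurons reading the same inputs, and the weights below are hypothetical values chosen by hand.

```python
import math
from typing import List


def neuron(inputs: List[float], weights: List[float], bias: float) -> float:
    """One artificial neuron: weighted sum of inputs, then a sigmoid."""
    # Each weight loosely plays the role of a synapse's strength.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The sigmoid squashes any number into (0, 1), a loose analogy to a firing rate.
    return 1.0 / (1.0 + math.exp(-total))


def layer(inputs: List[float], weight_rows: List[List[float]], biases: List[float]) -> List[float]:
    """A layer: several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical weights chosen by hand for illustration; training would learn them.
signals = layer([1.0, 2.0], weight_rows=[[0.5, -0.25], [1.0, 1.0]], biases=[0.0, -3.0])
print(signals)  # both neurons receive a net input of 0, so both output 0.5
```

Training a network means adjusting those weights and biases until the outputs match the data; nothing in the arithmetic resembles an actual action potential beyond the loose analogy.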

Deep learning has an advantage over traditional machine learning in its ability to handle more complex data and more complex relationships within that data. It can often produce more accurate results than traditional approaches, but it requires even larger amounts of data to do so. One reason deep neural networks can do this is their depth. The 'deep' in deep learning refers to mathematical depth: the number of layers of math formulas that make up the larger formula that is the neural network as a whole.
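
Here is a minimal sketch of what "depth" means in practice, in plain Python with made-up layer sizes and random weights. No training is shown, and the layer sizes, the ReLU activation, and the `make_network`/`forward` helpers are all assumptions for illustration. Each layer is one formula; running the input through the layers in order nests those formulas inside one another, and the depth is simply how many layers there are.

```python
import random
from typing import List, Tuple

# A layer is (weight matrix, bias vector); one row of weights per output.
Layer = Tuple[List[List[float]], List[float]]


def make_network(sizes: List[int], seed: int = 0) -> List[Layer]:
    """Build layers connecting sizes[0] -> sizes[1] -> ... with random weights."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    network: List[Layer] = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        network.append((weights, biases))
    return network


def forward(x: List[float], network: List[Layer]) -> List[float]:
    """Compute f_L(...f_2(f_1(x))...): each layer feeds the next."""
    for i, (weights, biases) in enumerate(network):
        x = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
        if i < len(network) - 1:
            # ReLU between layers; without a nonlinearity, stacked layers
            # would collapse into a single linear formula.
            x = [max(0.0, v) for v in x]
    return x


net = make_network([4, 8, 8, 8, 2])  # 4 inputs, three hidden layers of 8, 2 outputs
print("depth:", len(net))            # depth: 4
print(forward([0.1, 0.2, 0.3, 0.4], net))
```

Adding entries to the `sizes` list makes the network deeper; each extra layer gives the model another stage in which to combine the patterns found by the layer before it.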

Over the last 10 years, deep neural networks of one design or another have been behind many of the major advances in AI, not only in computer vision (e.g., face recognition and automated interpretation of medical imaging) but also in natural language processing, understanding, and generation (e.g., digital assistants like Apple's Siri, and Google Translate).

Noteworthy examples of deep learning:

- Face and object recognition in photos and videos

- Automated interpretation of medical imaging, e.g., x-rays, pathology slides

- Self-driving cars

- Google search results

- Natural language understanding and generation, e.g., Google Translate

- Automatic speech recognition, e.g., voice assistants like Siri and Alexa, live captioning

- Predicting molecular bioactivity for drug discovery

Generative AI and Large Language Models

"A generative model can take what it has learned from the examples it’s been shown and create something entirely new based on that information."

- Douglas Eck, research lead on the Google DeepMind team

Some of the most exciting advances of the last decade have been in language models. The single biggest change has come from transformers, a deep learning architecture that, paired with training objectives that draw their targets from the text itself, makes it possible to train useful models without labeling the data in advance. Because transformers process all the words in a passage in parallel rather than one at a time, they can be trained efficiently on billions of pages of text. The combination of more data and larger, deeper models has brought us closer to the fundamental promise of AI: getting computers to do things that traditionally require human intelligence, including responding to questions with accurate and natural-sounding language.
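
Why no advance labeling is needed can be shown without any neural network at all. In next-word prediction, a common self-supervised training objective for language models, the "label" for each example is simply the word that actually came next in the text. The Python sketch below only builds those training pairs; it does not implement a transformer, and it splits on whitespace, whereas real LLMs use subword tokens and far longer contexts. The function name and the context size of 3 are assumptions for illustration.

```python
from typing import List, Tuple

Pair = Tuple[Tuple[str, ...], str]


def next_word_examples(text: str, context_size: int = 3) -> List[Pair]:
    """Turn raw, unlabeled text into (context, next word) training pairs."""
    words = text.split()  # crude whitespace tokenization, for illustration only
    pairs: List[Pair] = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        target = words[i]  # the label comes from the text itself
        pairs.append((context, target))
    return pairs


for context, target in next_word_examples("the patient reports mild chest pain today"):
    print(" ".join(context), "->", target)
# the patient reports -> mild
# patient reports mild -> chest
# reports mild chest -> pain
# mild chest pain -> today
```

Every sentence in a corpus yields pairs like these for free, which is why billions of pages of unannotated text can serve as training data.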

Transformers also form the building blocks of the large language models (LLMs) and many of the other generative AI systems that have dominated the recent conversation about AI and language generation. Generative AI can power chatbots that write responses to questions, create images from text descriptions, compose music in a particular style or tone, or even generate synthetic data to train other AI algorithms. LLMs are a type of generative AI used to create text in natural-sounding language.
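
Douglas Eck's description of a generative model, learning from examples and then producing something new, can be illustrated with a model far simpler than an LLM. The Python sketch below is a bigram model: it records which word followed which in some example text, then generates new sequences by repeatedly sampling a plausible next word. It is a deliberately swapped-in toy, not how transformers work internally, and the example sentences and helper names are invented for illustration.

```python
import random
from collections import defaultdict
from typing import Dict, List


def train_bigram(corpus: str) -> Dict[str, List[str]]:
    """Record every word that follows each word in the example text."""
    model: Dict[str, List[str]] = defaultdict(list)
    words = corpus.split()
    for current, following in zip(words, words[1:]):
        # Duplicates are kept, so frequent continuations are sampled more often.
        model[current].append(following)
    return dict(model)


def generate(model: Dict[str, List[str]], start: str, max_words: int = 10, seed: int = 0) -> str:
    """Generate text by repeatedly sampling a word that followed the previous one."""
    rng = random.Random(seed)
    output = [start]
    while len(output) < max_words:
        followers = model.get(output[-1])
        if not followers:  # no known continuation: stop early
            break
        output.append(rng.choice(followers))
    return " ".join(output)


# Invented example sentences standing in for training data.
corpus = "patient denies fever . patient reports cough . patient denies chest pain ."
model = train_bigram(corpus)
print(generate(model, "patient", max_words=8, seed=1))
```

The model can recombine fragments it has seen into sequences that never appeared in the training text, yet it has no notion of whether a generated sentence is true for a given patient, which is one reason generated clinical text needs a clinician's review.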

With clinicians spending 2 hours on administrative work for every hour of patient care, this technology has the potential to make a big impact. LLM-based solutions can help summarize patient encounters and automate form filling, saving hours every day and helping clinicians focus on patients during the encounter. But LLMs alone can't create accurate clinical notes. They need large clinical datasets and fine-tuning, and ultimately they need the oversight of the clinician using the tool, who must verify the accuracy of the generated text before it is submitted to the patient record. As clinicians, we don't need to view these tools as threats that will replace our years of training, experience, and expertise. Their promise is to assist us in our work, not to turn us into passive consumers of their output, and it's our responsibility to shape the policies and practices that govern how we use AI so that we avoid its hazards.

Some current and potential applications of AI in medicine:

| Basic biomedical research | Translational research | Clinical practice |
| --- | --- | --- |
| Automated experiments | Biomarker discovery | Disease diagnosis |
| Automated data collection | Drug-target prioritization | Treatment selection |
| Gene function annotation | Drug discovery | Risk stratification |
| Predict transcription factor binding sites | Drug repurposing | Remote patient monitoring |
| Simulation of molecular dynamics | Prediction of chemical toxicity | Automated surgery |
| Literature mining | Genetic variation annotation | Genomic interpretation |
| | | Automated note generation |
| | | Digital health coaching |

Adapted from: Yu, Kun-Hsing, Andrew L. Beam, and Isaac S. Kohane. 2018. "Artificial intelligence in healthcare." Nature Biomedical Engineering 2 (10): 719; and Lin, Steven. 2022. "A Clinician's Guide to Artificial Intelligence (AI): Why and How Primary Care Should Lead the Health Care AI Revolution." The Journal of the American Board of Family Medicine 35 (1), January 2022: 175-184.

The Responsibility and Promise of AI

Let me pause to instill a bit of humanity, camaraderie, and motivation for this educational journey. The mission to better understand these technologies and their application in medicine is critically important, and extremely exciting. We are very fortunate to be the ones at the forefront of medicine at the precise time that these technologies are maturing and transitioning from bench to bedside. Of course, with this tremendous opportunity comes tremendous responsibility, and it is our generation’s responsibility to ensure that we harness the promise of AI in healthcare to serve the common good.

In order to fulfill this responsibility, we have to go beyond the abstractions of a philosopher in an armchair and understand the technology at a deeper level. We need to engage with the details of how machines see the world: what they "want," their potential biases and failure modes, and their temperamental quirks. Only then can we intelligently shape our roadmaps and policies with respect to AI. We need a grasp of the fundamental principles, just as we did when we learned about antibiotics in medical school. Since AI wasn't part of most of our medical school curricula, we have a bit of work to do. But this kind of responsibility is something that we in healthcare are quite accustomed to. It is our job, and it is what we love.

Editor’s note: This post was originally published in February 2019 and has been updated to incorporate the latest advances in AI.

Quote Sources

Pedro Domingos quoted in Reese, Byron. “Voices in AI - Episode 23: A Conversation with Pedro Domingos.” GigaOm, December 4, 2017. https://gigaom.com/2017/12/04/voices-in-ai-episode-23-a-conversation-with-pedro-domingos/.

Samuel, A. L. “Some Studies in Machine Learning Using the Game of Checkers.” IBM Journal of Research and Development, vol. 3, no. 3, 1959, pp. 210-229.

Andrew Ng quoted in Kelly, Kevin. “Cognifying.” The Inevitable: Understanding the 12 Technological Forces That Will Shape Our Future, Viking Press, 2017, pp. 68-69.

Douglas Eck quoted in “Ask a Techspert: What Is Generative AI?” Google, April 11, 2023. https://blog.google/inside-google/googlers/ask-a-techspert/what-is-generative-ai/.